Explore this notebook interactively:

Representing an EO data cube#

An EO data cube stores and organizes EO data, and possibly also additional data from other sources (e.g. a digital surface model). It falls entirely within the image domain of the framework and is constructed and managed by a software expert.

This notebook describes the data structures to represent an EO data cube in semantique, and how to interact with it.

Content#

Prepare#

Import packages:

[1]:

import semantique as sq

[2]:

import geopandas as gpd

import json

Structure#

There is not a single standard on how an EO data cube should be implemented in practice. Many approaches exist, which we refer to as different configurations of an EO data cube. The structure of semantique is flexible and does not put any limitations on what the configuration of an EO data cube should be. Instead, it allows expert users to create their own class that represents their specific EO data cube configuration. Such a class should always inherit from the abstract base class Datacube that semantique contains. Such a base class can be seen as a blueprint for any object that represents an EO data cube. Do note that an EO data cube representation in semantique never contains the data values of the cube themselves. Instead, it will have some property that allows the retriever to access the actual storage location. How this property looks like will depend on the configuration of the EO data cube.

[3]:

base = sq.datacube.Datacube()

To ensure interoperability, an EO data cube representation of any configuration is required to contain two standard elements. The first one is the layout, which is generally provided when initializing an instance of an EO data cube class and stored inside the layout property. The layout is a repository containing a description of the content of the EO data cube. Each distinct data layer in the cube (e.g. the different spectral bands) should be described by a dedicated metadata object in the layout that can be indexed by a unique name assigned to that layer. This metadata object provides information about the values in the layer, but also about where it can be found inside the structure of the cube. In semantique, the layout is always expected to have the structure of a Python dictionary. In this dictionary, the names of data layers are the keys and the metadata objects (which themselves are a dictionary as well) are the values. The dictionary may have a nested structure formalizing a categorization of data layers, e.g. the metadata object of the data layer “blue band” can be indexed by the key pair (“reflectance”, “blue band”) instead of only “blue band”.

[4]:

hasattr(base, "layout")

[4]:

True

The second required element is the retriever function, which should be implemented as the retrieve() method of an EO data cube class. The retriever is an interface that exposes the content of the EO data cube to the other components in the querying framework. Given a textual reference to a data layer and a spatio-temporal extent, it is able to retrieve the corresponding data values from the cube, and return them in a three-dimensional, spatio-temporal array. The exact implementation of the retriever depends on the configuration of the EO data cube, but the inputs it accepts and the output it returns does not. Hence, the retriever serves as a standardized API that allows other components of the framework to access the content of the EO data cube independent from its configuration.

[5]:

hasattr(base, "retrieve")

[5]:

True

It is important to note here that when evaluating a query recipe the query processor does not care at all what the configuration of the EO data cube is and how it retrieves data. It only interacts with the retriever function, and thus only cares about what input the retriever function accepts, and in what format it returns the retrieved data.

The Opendatacube configuration#

Through the Opendatacube sub-class semantique has built-in support for EO data cubes that are deployed using the Open Data Cube software. It contains a retriever that knows how to interact with the Python API of Open Data Cube.

To initialize a representation of such a data cube, you need to provide a valid layout dictionary and a Datacube object that allows the retriever to interact with the actual data storage. A valid layout for this configuration means that the metadata objects for each data layer should contain at least the following keys and values:

name: The name of the data layer inside the Open Data Cube. In Open Data Cube terminology this is called the measurement.product: The product in which the data layer is stored inside the Open Data Cube.type: The value type of the values in the data layer. There are no limits to what the value type categorization should be. If it differs from the semantique value types, this needs to be mapped through a configuration parameter when initializing the instance, see below.values: An overview of the values in the data layer. If the values are numerical, this should be a dictionary containing the keys “min”, “max” and “precision”. If the values are categorical, this should be a list of dictionaries, with each dictionary referring to single category and containing the keys “id”, “label” and “description”.

When initializing the instance, you should also provide the timezone in which the temporal coordinates inside the data cube are stored. By default, semantique assumes this is UTC. Additionaly, you can provide several configuration parameters that tune the data retrieval process, see the documentation of the Opendatacube class for details.

Since we do not have an Open Data Cube running in our semantique development environment, nor have a Open Data Cube layout file stored inside the package, we can not show code that initializes and interacts with a Opendatacube instance. However, the following code snippet should give an indication:

import datacube

import json

import semantique as sq

odc_obj = datacube.Datacube()

with open("somedir/layout.json", "r") as file:

dc = sq.datacube.Opendatacube(json.load(file), connection = odc_obj)

The Geotiffarchive configuration#

As a second built-in EO data cube configuration semantique contains the so-called Geotiffarchive. This configuration is targeted at small data cubes that are nothing more than a zipped archive of multiple GeoTIFF files, each having two spatial and one temporal dimension. Given the nature of GeoTIFF, the time dimension will be recognized as “band dimension”, and therefore the time coordinates should be stored as band description for each band. Be aware that the Geotiffarchive configuration in semantique is mainly meant for demonstration purposes, and the retriever function is not optimized for performance (e.g. the full GeoTIFF is loaded into memory before subsetting in space and time).

To initialize a representation of a Geotiffarchive data cube, you need to provide a valid layout dictionary and the filepath to the zipped archive of GeoTIFF files. A valid layout for this configuration means that the metadata objects for each data layer should contain at least the following keys and values:

file: The filename of the GeoTIFF file in which the values for the data layer are stored.type: The value type of the values in the data layer. There are no limits to what the value type categorization should be. If it differs from the semantique value types, this needs to be mapped through a configuration parameter when initializing the instance, see below.values: An overview of the values in the data layer. If the values are numerical, this should be a dictionary containing the keys “min”, “max” and “precision”. If the values are categorical, this should be a list of dictionaries, with each dictionary referring to single category and containing the keys “id”, “label” and “description”.

When initializing the instance, you should also provide the timezone in which the temporal coordinates inside the data cube are stored. By default, semantique assumes this is UTC. Additionaly, you can provide several configuration parameters that tune the data retrieval process, see the documentation of the Geotiffarchive class for details.

Now we can initialize an instance of the Geotiffarchive class using the small demo data cube that is contained in semantique. The layout file we use looks like this.

[6]:

with open("files/layout_gtiff.json", "r") as file:

dc = sq.datacube.GeotiffArchive(json.load(file), src = "files/layers_gtiff.zip")

[7]:

isinstance(dc, sq.datacube.Datacube)

[7]:

True

To interact with the retriever, we need to provide an array that specifies the spatio-temporal extent inside which the requested data should be retrieved.

[8]:

from semantique.processor.utils import parse_extent

[9]:

space = sq.SpatialExtent(gpd.read_file("files/footprint.geojson"))

time = sq.TemporalExtent("2019-01-01", "2020-12-31")

extent = parse_extent(space, time, spatial_resolution = [-10, 10], crs = 3035)

[10]:

colortype = dc.retrieve("appearance", "colortype", extent = extent)

[11]:

colortype

[11]:

<xarray.DataArray 'colortype' (time: 3, y: 563, x: 576)>

array([[[29., 29., 29., ..., 29., 29., 29.],

[29., 29., 29., ..., 29., 29., 29.],

[29., 29., 29., ..., 5., 29., 29.],

...,

[ 5., 5., 5., ..., 29., 29., 29.],

[ 5., 5., 5., ..., 29., 29., 29.],

[ 5., 29., 29., ..., 29., 29., 29.]],

[[ 4., 4., 4., ..., 4., 1., 1.],

[ 6., 4., 4., ..., 3., 1., 4.],

[ 6., 4., 4., ..., 4., 4., 4.],

...,

[ 1., 1., 1., ..., 1., 1., 1.],

[ 1., 1., 1., ..., 1., 1., 1.],

[ 1., 1., 1., ..., 1., 1., 1.]],

[[21., 21., 21., ..., 4., 5., 4.],

[21., 21., 21., ..., 3., 6., 4.],

[21., 21., 21., ..., 3., 4., 4.],

...,

[ 3., 3., 3., ..., 3., 27., 27.],

[ 3., 27., 3., ..., 27., 27., 27.],

[27., 3., 7., ..., 27., 27., 27.]]])

Coordinates:

spatial_ref int32 0

* x (x) float64 4.53e+06 4.53e+06 ... 4.536e+06 4.536e+06

* y (y) float64 2.697e+06 2.697e+06 ... 2.691e+06 2.691e+06

temporal_ref int32 0

* time (time) datetime64[ns] 2019-12-15T10:17:33.408715 ... 2020-...

spatial_feats (y, x) float64 1.0 1.0 1.0 1.0 1.0 ... 1.0 1.0 1.0 1.0 1.0

Attributes:

AREA_OR_POINT: Area

scale_factor: 1.0

add_offset: 0.0

_FillValue: 1.7976931348623157e+308

value_type: ordinal

value_labels: {1: 'SVHNIR', 2: 'SVLNIR', 3: 'AVHNIR', 4: 'AVLNIR', 5: '...[12]:

colors = {

"Strong vegetation with high NIR": "darkgreen",

"Strong vegetation with low NIR": "forestgreen",

"Average vegetation with high NIR": "limegreen",

"Average vegetation with low NIR": "mediumseagreen",

"Weak vegetation": "springgreen",

"Shadow area with vegetation": "palegreen",

"Shrub Rangeland with High NIR": "darkgoldenrod",

"Shrub Rangeland with Low": "goldenrod",

"Herbaceous Rangeland": "gold",

"Weak Rangeland": "khaki",

"Pit or bog": "olive",

"Greenhouse": "yellowgreen",

"Very bright barren land or built-up": "darkred",

"Bright barren land or built-up": "firebrick",

"Strong barren land or built-up": "red",

"Average barren land or built-up": "tomato",

"Dark barren land or built-up": "salmon",

"Weak barren land or Shadow area with Barren Land": "lightsalmon",

"Near InfraRed-Peaked Barren land and Built-up": "coral",

"Burned area": "yellow",

"Deep water or shadow": "dodgerblue",

"Shallow water or shadow": "deepskyblue",

"Turbid water or shadow": "lightskyblue",

"Salty Shallow Water": "lightblue",

"Cloud": "lightgrey",

"Smoke plume": "dimgrey",

"Thin clouds over vegetation": "aquamarine",

"Thin clouds on water area or Barren land or Built-up areas": "paleturquoise",

"Snow or water ice": "snow",

"Snow shadow": "mistyrose",

"Shadow areas": "slategrey",

"Flame": "plum",

"Unknown": "black",

}

[13]:

values = list(range(1, 34))

levels = [x - 0.5 for x in values + [max(values) + 1]]

legend = {"ticks": [1, 10, 20, 30], "label": "colortype"}

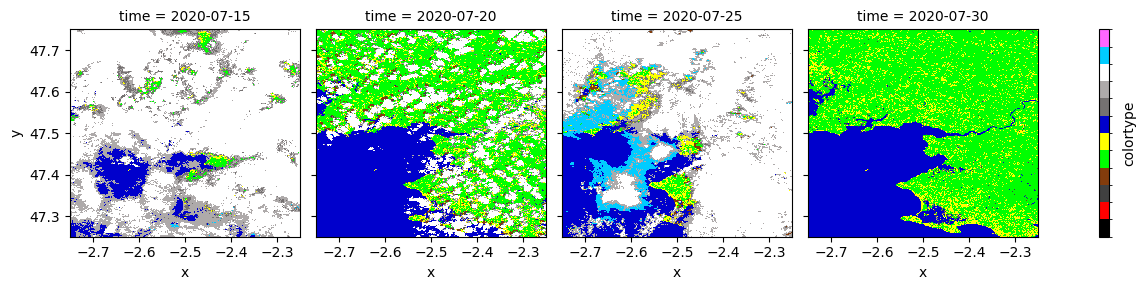

colortype.plot(x = "x", y = "y", col = "time", levels = levels, colors = list(colors.values()), cbar_kwargs = legend)

[13]:

<xarray.plot.facetgrid.FacetGrid at 0x1e7da91caf0>

The STACCube configuration#

Semantique contains a third built-in EO data cube configuration called STACCube. This configuration is targeted at ad-hoc data cubes built from the results of a STAC metadata search. Contrary to the Opendatacube and the Geotiffarchive, it doesn’t require the pre-organising the data (e.g. to ingest the data into a database in case of the Opendatacube or to create a temporally stacked geotiff in case of the Geotiffarchive). Instead, the STACCube contains a retriever that knows how to fetch assets linked in STAC search results into a data cube. If the linked assets are provided in Cloud Optimizes GeoTiff format (CoGs), the STACCube offers a high-performance option for fetching and subsequently processing earth observation data only for the area and timespan of interest. To initialize a representation of such a data cube, you need to provide the STAC search results and valid layout dictionary. Semantique contains a third built-in EO data cube configuration called STACCube. This configuration is targeted at ad-hoc data cubes built from the results of a STAC metadata search. Contrary to the Opendatacube and the Geotiffarchive, it doesn’t require pre-organising the data (e.g. to ingest the data into a database in case of the Opendatacube or to create a temporally stacked geotiff in case of the Geotiffarchive). Instead, the STACCube contains a retriever that knows how to fetch assets linked in STAC search results into a data cube. If the linked assets are provided in Cloud Optimizes GeoTiff format (CoGs), the STACCube offers a high-performance option for fetching and subsequently processing earth observation data only for the area and timespan of interest. To initialize a representation of such a data cube, you need to provide the STAC search results and valid layout dictionary. The STAC search results can be provided as an pystac.item_collection.ItemCollection or as an iterable of pystac.item.Item. It is important that the properties object for each item contains a datetime attribute that is non-null. Otherwise, the data can’t be indexed temporally. The spatial index will be created based upon the asset-specific proj:epsg, proj:bbox, proj:shape, proj:transform attributes.

A valid layout for this configuration means that the metadata objects for each data layer should contain at least the following keys and values:

name: The name of the data layer, called asset in STAC terminology.type: The value type of the values in the data layer. There are no limits to what the value type categorization should be. If it differs from the semantique value types, this needs to be mapped through a configuration parameter when initializing the instance, see below.values: An overview of the values in the data layer. If the values are numerical, this should be a dictionary containing the keys “min”, “max” and “precision”. If the values are categorical, this should be a list of dictionaries, with each dictionary referring to single category and containing the keys “id”, “label” and “description”.

Additionally, you can provide several configuration parameters that tune the data retrieval process, see the documentation of the STACCube class for details. Different to the Opendatacube and the Geotiffarchive, STACCube doesn’t require to specify the timezone in which the temporal coordinates inside the data cube are stored. By default, the timezone is inferred from the STAC search results and converted to UTC.

We will demonstrate the initialization of an instance of the STACCube class using some Sentinel-2 data fetched from the registry of open data on AWS. The layout file we use looks like this.

[14]:

from pystac_client import Client

from shapely.geometry import box

# define temporal & spatial range to perform STAC query

xmin, ymin, xmax, ymax = -2.75, 47.25, -2.25, 47.75

aoi = box(xmin, ymin, xmax, ymax)

t_range = ["2020-07-15", "2020-08-01"]

# STAC-based metadata retrieval

catalog = Client.open("https://earth-search.aws.element84.com/v1")

query = catalog.search(

collections="sentinel-2-l2a",

datetime=t_range,

limit=100,

intersects=aoi

)

item_coll = query.item_collection()

# list results - part I

items = list(query.items())

print(f"Found: {len(items):d} items.")

# list results - part II

stac_json = query.item_collection_as_dict()

gdf = gpd.GeoDataFrame.from_features(stac_json, "epsg:4326")

gdf

Found: 8 items.

[14]:

| geometry | created | platform | constellation | instruments | eo:cloud_cover | proj:epsg | mgrs:utm_zone | mgrs:latitude_band | mgrs:grid_square | ... | s2:datastrip_id | s2:granule_id | s2:reflectance_conversion_factor | datetime | s2:sequence | earthsearch:s3_path | earthsearch:payload_id | earthsearch:boa_offset_applied | processing:software | updated | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | POLYGON ((-3.00025 47.85369, -3.00025 46.86571... | 2023-09-09T07:24:43.535Z | sentinel-2b | sentinel-2 | [msi] | 0.000680 | 32630 | 30 | T | WT | ... | S2B_OPER_MSI_L2A_DS_S2RP_20230503T232911_S2020... | S2B_OPER_MSI_L2A_TL_S2RP_20230503T232911_A0177... | 0.969660 | 2020-07-30T11:18:07.218000Z | 1 | s3://sentinel-cogs/sentinel-s2-l2a-cogs/30/T/W... | roda-sentinel2/workflow-sentinel2-to-stac/b2b3... | True | {'sentinel2-to-stac': '0.1.1'} | 2023-09-09T07:24:43.535Z |

| 1 | POLYGON ((-3.00025 47.85369, -1.53274 47.84432... | 2022-11-06T09:10:59.398Z | sentinel-2b | sentinel-2 | [msi] | 0.044423 | 32630 | 30 | T | WT | ... | S2B_OPER_MSI_L2A_DS_EPAE_20200730T131922_S2020... | S2B_OPER_MSI_L2A_TL_EPAE_20200730T131922_A0177... | 0.969660 | 2020-07-30T11:18:07.217000Z | 0 | s3://sentinel-cogs/sentinel-s2-l2a-cogs/30/T/W... | roda-sentinel2/workflow-sentinel2-to-stac/2aa2... | False | {'sentinel2-to-stac': '0.1.0'} | 2022-11-06T09:10:59.398Z |

| 2 | POLYGON ((-3.00025 47.85369, -3.00025 46.86571... | 2023-07-18T00:30:34.159Z | sentinel-2a | sentinel-2 | [msi] | 82.378161 | 32630 | 30 | T | WT | ... | S2A_OPER_MSI_L2A_DS_S2RP_20230323T230825_S2020... | S2A_OPER_MSI_L2A_TL_S2RP_20230323T230825_A0265... | 0.968747 | 2020-07-25T11:18:09.991000Z | 1 | s3://sentinel-cogs/sentinel-s2-l2a-cogs/30/T/W... | roda-sentinel2/workflow-sentinel2-to-stac/e73f... | True | {'sentinel2-to-stac': '0.1.1'} | 2023-07-18T00:30:34.159Z |

| 3 | POLYGON ((-3.00025 47.85369, -1.53274 47.84432... | 2022-11-06T09:16:29.219Z | sentinel-2a | sentinel-2 | [msi] | 76.555313 | 32630 | 30 | T | WT | ... | S2A_OPER_MSI_L2A_DS_MPS__20200725T120536_S2020... | S2A_OPER_MSI_L2A_TL_MPS__20200725T120536_A0265... | 0.968747 | 2020-07-25T11:18:09.991000Z | 0 | s3://sentinel-cogs/sentinel-s2-l2a-cogs/30/T/W... | roda-sentinel2/workflow-sentinel2-to-stac/1ede... | False | {'sentinel2-to-stac': '0.1.0'} | 2022-11-06T09:16:29.219Z |

| 4 | POLYGON ((-3.00025 47.85369, -3.00025 46.86571... | 2023-09-09T03:08:50.038Z | sentinel-2b | sentinel-2 | [msi] | 25.680962 | 32630 | 30 | T | WT | ... | S2B_OPER_MSI_L2A_DS_S2RP_20230423T113853_S2020... | S2B_OPER_MSI_L2A_TL_S2RP_20230423T113853_A0176... | 0.968061 | 2020-07-20T11:18:06.203000Z | 1 | s3://sentinel-cogs/sentinel-s2-l2a-cogs/30/T/W... | roda-sentinel2/workflow-sentinel2-to-stac/d045... | True | {'sentinel2-to-stac': '0.1.1'} | 2023-09-09T03:08:50.038Z |

| 5 | POLYGON ((-3.00025 47.85369, -1.53274 47.84432... | 2022-11-06T05:16:55.629Z | sentinel-2b | sentinel-2 | [msi] | 19.963404 | 32630 | 30 | T | WT | ... | S2B_OPER_MSI_L2A_DS_EPAE_20200720T131521_S2020... | S2B_OPER_MSI_L2A_TL_EPAE_20200720T131521_A0176... | 0.968061 | 2020-07-20T11:18:06.202000Z | 0 | s3://sentinel-cogs/sentinel-s2-l2a-cogs/30/T/W... | roda-sentinel2/workflow-sentinel2-to-stac/ba8f... | False | {'sentinel2-to-stac': '0.1.0'} | 2022-11-06T05:16:55.629Z |

| 6 | POLYGON ((-3.00025 47.85369, -3.00025 46.86571... | 2023-09-09T09:10:47.384Z | sentinel-2a | sentinel-2 | [msi] | 85.173172 | 32630 | 30 | T | WT | ... | S2A_OPER_MSI_L2A_DS_S2RP_20230423T090139_S2020... | S2A_OPER_MSI_L2A_TL_S2RP_20230423T090139_A0264... | 0.967606 | 2020-07-15T11:18:09.131000Z | 1 | s3://sentinel-cogs/sentinel-s2-l2a-cogs/30/T/W... | roda-sentinel2/workflow-sentinel2-to-stac/61a3... | True | {'sentinel2-to-stac': '0.1.1'} | 2023-09-09T09:10:47.384Z |

| 7 | POLYGON ((-3.00025 47.85369, -1.53274 47.84432... | 2022-11-06T05:17:10.863Z | sentinel-2a | sentinel-2 | [msi] | 81.696101 | 32630 | 30 | T | WT | ... | S2A_OPER_MSI_L2A_DS_SGS__20200715T140105_S2020... | S2A_OPER_MSI_L2A_TL_SGS__20200715T140105_A0264... | 0.967606 | 2020-07-15T11:18:09.130000Z | 0 | s3://sentinel-cogs/sentinel-s2-l2a-cogs/30/T/W... | roda-sentinel2/workflow-sentinel2-to-stac/cbfa... | False | {'sentinel2-to-stac': '0.1.0'} | 2022-11-06T05:17:10.863Z |

8 rows × 42 columns

[15]:

import warnings

# define datacube

with open("files/layout_stac.json", "r") as file:

dc = sq.datacube.STACCube(

json.load(file),

src = item_coll,

dtype="int8",

na_value=0,

)

# use same extents as for STAC query to set up the context for the datacube

space = sq.SpatialExtent(gpd.GeoDataFrame(geometry=[aoi], crs = 4326))

time = sq.TemporalExtent(*t_range)

extent = parse_extent(space, time, spatial_resolution = [-0.001, 0.001], crs = 4326)

# load the data

with warnings.catch_warnings():

warnings.simplefilter("ignore", UserWarning)

data = dc.retrieve("appearance", "scl", extent=extent)

data

[15]:

<xarray.DataArray 'stackstac-e4a20ff0df5f8779104284b323af0f6b' (time: 4,

y: 500, x: 500)>

array([[[9., 9., 9., ..., 9., 9., 9.],

[9., 9., 9., ..., 9., 9., 9.],

[9., 9., 9., ..., 9., 9., 9.],

...,

[6., 6., 6., ..., 9., 9., 9.],

[6., 6., 6., ..., 9., 9., 9.],

[6., 6., 6., ..., 9., 9., 9.]],

[[9., 9., 8., ..., 4., 7., 8.],

[4., 4., 4., ..., 4., 3., 4.],

[4., 4., 4., ..., 4., 3., 7.],

...,

[6., 6., 6., ..., 6., 6., 6.],

[6., 6., 6., ..., 6., 6., 8.],

[6., 6., 6., ..., 9., 9., 9.]],

[[9., 9., 8., ..., 9., 9., 9.],

[9., 9., 9., ..., 9., 9., 9.],

[9., 9., 9., ..., 9., 9., 9.],

...,

[6., 6., 6., ..., 8., 8., 8.],

[6., 6., 6., ..., 8., 6., 8.],

[6., 6., 6., ..., 8., 8., 8.]],

[[4., 4., 4., ..., 4., 4., 4.],

[4., 4., 4., ..., 4., 4., 4.],

[4., 4., 4., ..., 4., 5., 4.],

...,

[6., 6., 6., ..., 6., 6., 6.],

[6., 6., 6., ..., 6., 6., 6.],

[6., 6., 6., ..., 6., 6., 6.]]], dtype=float32)

Coordinates:

* x (x) float64 -2.75 -2.749 -2.748 ... -2.253 -2.252 -2.251

* y (y) float64 47.75 47.75 47.75 47.75 ... 47.25 47.25 47.25

temporal_ref int32 0

* time (time) datetime64[ns] 2020-07-15 2020-07-20 ... 2020-07-30

spatial_feats (y, x) float64 1.0 1.0 1.0 1.0 1.0 ... 1.0 1.0 1.0 1.0 1.0

Attributes:

spec: RasterSpec(epsg=4326, bounds=(-2.75, 47.25, -2.25, 47.75),...

crs: epsg:4326

transform: | 0.00, 0.00,-2.75|\n| 0.00,-0.00, 47.75|\n| 0.00, 0.00, 1...

resolution: 0.001

value_type: ordinal

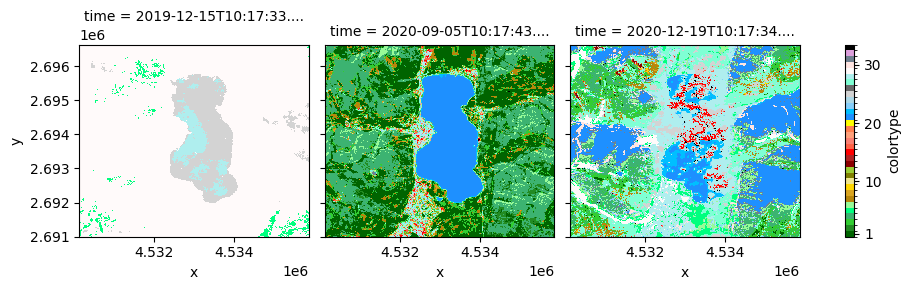

value_labels: {0: 'mask', 1: 'saturated', 2: 'dark', 3: 'shadow', 4: 've...[16]:

import numpy as np

# plot results

colors = {

"no data": [0, 0, 0],

"saturated/defective": [255, 0, 0],

"dark areas": [64, 64, 64],

"cloud shadows": [131, 60, 12],

"vegetation": [0, 255, 0],

"not vegetated": [255, 255, 0],

"water": [0, 0, 204],

"unclassified": [117, 113, 113],

"cloud medium prob": [174, 170, 170],

"cloud high prob": [255, 255, 255],

"thin cirrus": [0, 204, 255],

"snow": [255, 102, 255]

}

values = list(range(0, 12))

levels = [x - 0.5 for x in values + [max(values) + 1]]

colors = list([np.array(x)/255 for x in colors.values()])

legend = {"ticks": [], "label": "colortype"}

data.plot(x = "x", y = "y", col = "time", levels = levels, colors = colors, cbar_kwargs = legend);